Logs are a primary component of observability. They are records of activities in a system, providing insights into its health and performance. Managing logs is challenging due to the high data volume and the need for efficient storage.

Log aggregation makes the complexity and growth of log data far easier to handle. It streamlines the analysis and monitoring of logs from cloud-based and on-premises IT environments.

Read on to learn what log aggregation means, how it works, and why it is crucial.

What Does Log Aggregation Mean?

Log aggregation is the process of collecting and consolidating log data from servers, applications, networking devices, and other sources in an IT infrastructure. It uses tools and systems to gather logs, convert them into a unified format, and store them in a single location for easier analysis, monitoring, and management.

Applications and services are continuously deployed across multiple cloud servers and regions, which makes tracking their operations and performance through logs complex. In Kubernetes, for example, you would otherwise have to inspect each pod's log events individually with a command line interface (CLI) tool such as kubectl. Log aggregation addresses both of these problems.

Log aggregation provides a consolidated view of logs from all resources. This lets users monitor cloud infrastructure health, troubleshoot issues, detect threats, and support compliance with regulations. The insights from log aggregation make cloud resource management and optimization more proactive.

What Are Logs?

Logs are chronological records of events in an application or a system. They give a detailed view of every action: what happened, where and how it happened, and who was involved.

Log data can come from web servers, application code, databases, network devices, and more. Once logging is set up, systems automatically generate log entries that typically contain the following:

Timestamp

Event Description

Severity Level

User or System Metadata
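As a rough illustration, a single structured log entry carrying these fields might be built as follows. This is a minimal Python sketch; the field names (timestamp, description, severity, metadata) are illustrative assumptions rather than a standard schema.

```python
import json
from datetime import datetime, timezone


def make_log_entry(description, severity, **metadata):
    """Build one structured log entry containing the four common fields."""
    return {
        # ISO 8601 timestamp in UTC so entries from different hosts compare cleanly
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "description": description,
        "severity": severity,
        # Free-form user or system context, such as a user name or host name
        "metadata": metadata,
    }


entry = make_log_entry("User login succeeded", "INFO", user="alice", host="web-01")
print(json.dumps(entry))
```

Emitting entries as JSON like this keeps every field machine-readable, which makes the later parsing and analysis steps simpler.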

Logs help IT teams gain insights into the performance and behavior of an application. They assist in diagnosing issues, detecting suspicious activity, and improving system security and reliability.

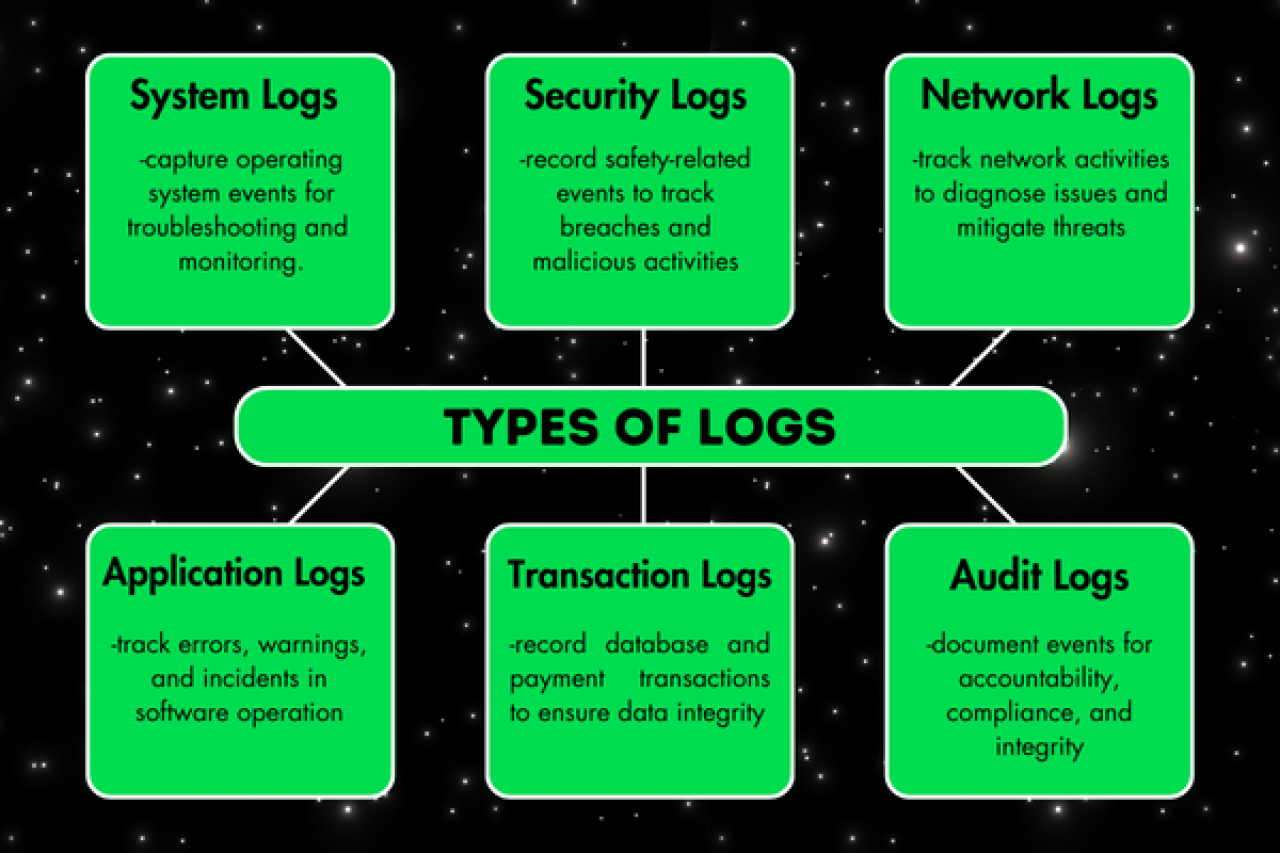

Types of Logs

There are different types of log files, all serving different purposes. They are crucial in ensuring efficient operations, monitoring security, and adhering to regulatory standards.

Here are the primary types of logs:

System Logs

System logs record significant events in the operating system. They capture shutdowns, reboots, and failures, providing insights into the health and operation of the system.

Examples of system logs are boot logs, kernel logs, and system error logs. This log type is typically used to troubleshoot hardware and software issues, monitor system performance, and ensure the system runs as expected.

Security Logs

Security logs document any event related to the safety of a device, application, or system. These records track potential security breaches, unauthorized logins, and malicious activities.

Organizations can quickly identify risks using security logs like firewall logs and IDS logs. They also help accelerate the investigation and resolution of threats.

Definition: Intrusion Detection System

An intrusion detection system (IDS) is a security technology that monitors a network or system for malicious activity or policy violations. It analyzes network traffic, such as data packets, to look for known attack patterns and to identify anomalies.

An IDS is essential for the early detection of cyber threats, helping teams identify attacks so they can be stopped before they cause significant damage.
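To make the security-log use case concrete, here is a minimal Python sketch that flags source IP addresses with repeated failed logins. The log line format, the FAILED_LOGIN keyword, and the threshold of three failures are all hypothetical; real security logs vary by product.

```python
from collections import Counter

# Hypothetical security-log lines; real formats vary by product.
SECURITY_LOG = [
    "2024-05-01T10:00:01Z FAILED_LOGIN user=bob ip=203.0.113.5",
    "2024-05-01T10:00:03Z FAILED_LOGIN user=bob ip=203.0.113.5",
    "2024-05-01T10:00:04Z LOGIN_OK user=alice ip=198.51.100.7",
    "2024-05-01T10:00:06Z FAILED_LOGIN user=root ip=203.0.113.5",
]


def suspicious_ips(lines, threshold=3):
    """Return IPs with at least `threshold` failed logins, mapped to their counts."""
    failures = Counter()
    for line in lines:
        parts = line.split()
        if len(parts) < 2 or parts[1] != "FAILED_LOGIN":
            continue
        # Turn the remaining "key=value" tokens into a dict
        fields = dict(p.split("=", 1) for p in parts[2:] if "=" in p)
        if "ip" in fields:
            failures[fields["ip"]] += 1
    return {ip: n for ip, n in failures.items() if n >= threshold}


print(suspicious_ips(SECURITY_LOG))
```

Rules like this only work well when security logs from every source land in one place, which is part of the case for aggregation.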

Network Logs

Network logs document activity related to network operations, such as traffic flow, device status, and network anomalies. Router, switch, and DNS logs are typical examples. By recording every activity on the network, companies can quickly diagnose issues and mitigate potential threats.

Application Logs

Application logs record events related to the operation of a specific software program. They track errors, warnings, and other incidents that affect the application's behavior.

Examples include web server logs, database logs, and application event logs. They help teams debug errors, understand performance issues, and analyze user behavior within the application.

Transaction Logs

Databases and applications generate transaction logs as they process transactions. Database and payment transaction logs are recorded to ensure data integrity; for example, a database can use its transaction log to restore a consistent state after a crash. These logs also support data recovery and security audits.

Audit Logs

Audit logs establish accountability by recording actions taken by users or by the system itself. They document changes to user permissions, important files, and transaction records. This log type supports compliance with regulatory requirements and is often used in compliance auditing, forensic analysis, and verifying the integrity of critical operations.

Why Is Log Aggregation Important? Main Benefits

Log aggregation is essential because it centralizes logs from various sources into a single, manageable location. This approach makes it easier for organizations to monitor and analyze all the data in their IT environment.

Managing logs can be challenging. The volume of data generated by modern systems and applications grows daily, and each infrastructure component generates its own logs in different formats. This fragmentation hinders troubleshooting and complicates monitoring of the system's health and performance.

Without log aggregation, identifying the root cause of issues becomes time-consuming. This can lead to missed urgent warnings, delayed response times, and prolonged downtime.

Without log aggregation, it is difficult to gain insight into a system's overall behavior. Centralizing log management gives users a unified view, which enables more efficient troubleshooting and more effective system monitoring.

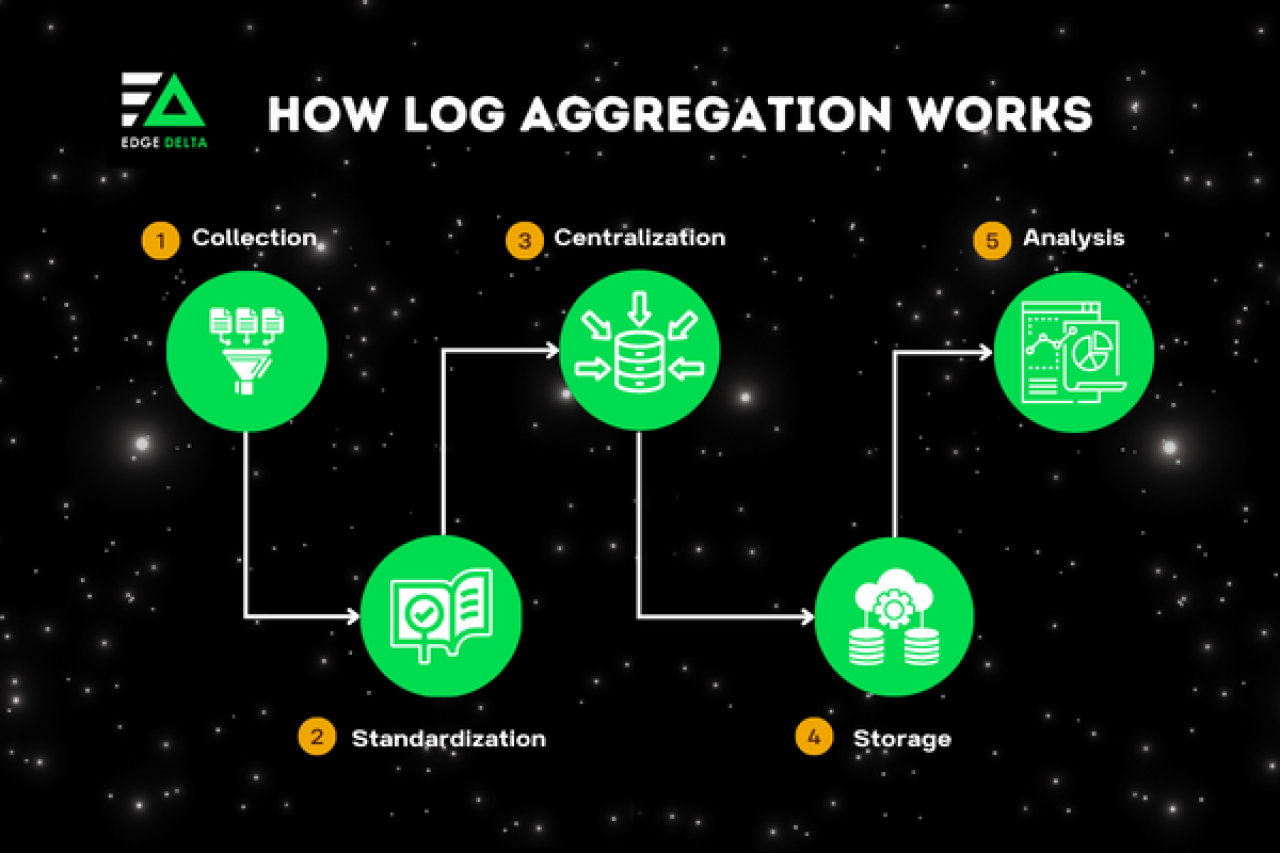

How Does Log Aggregation Work? Step-by-step Guide

Log aggregation is critical for monitoring, analyzing, and troubleshooting systems. It helps companies understand their operations and identify any issues within their infrastructure.

The log aggregation process can be divided into several key steps:

1. Collection

Log data is gathered from servers, applications, network devices, security systems, and other sources across the IT environment. Collection typically relies on agents running on or near each source that read log data and ship it onward.
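The core of a collection agent is reading new lines from a log file and grouping them for shipment. The Python sketch below assumes a plain-text, newline-delimited log file; the function names are hypothetical, and a production agent would also handle file rotation, retries, and the actual network transport.

```python
import os
import tempfile


def read_new_lines(path, offset):
    """Read lines appended to `path` since byte `offset`.

    Returns the complete new lines and the offset to resume from next time.
    A trailing partial line (no newline yet) is left for the next read.
    """
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    end = data.rfind(b"\n")
    if end == -1:
        return [], offset  # nothing complete yet
    complete = data[: end + 1]
    lines = complete.decode("utf-8", errors="replace").splitlines()
    return lines, offset + len(complete)


def batches(lines, size):
    """Group lines into fixed-size batches for shipping."""
    for i in range(0, len(lines), size):
        yield lines[i : i + size]


# Example: simulate an application appending to a log file between two reads.
path = os.path.join(tempfile.mkdtemp(), "app.log")
with open(path, "wb") as f:
    f.write(b"first\nsecond\npart")  # the last line is still being written
lines, offset = read_new_lines(path, 0)
with open(path, "ab") as f:
    f.write(b"ial\n")
more, offset = read_new_lines(path, offset)
print(list(batches(lines + more, 2)))
```

A real agent would call read_new_lines in a loop, persist the returned offset so it can resume after a restart, and pass each batch to whatever shipping mechanism it uses.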

2. Standardization and Parsing

Since logs come from various sources, they often follow different formats and conventions, so standardizing them is crucial. This step often begins with parsing, which extracts structured fields from raw log entries. Teams can then convert all collected log data into a common format for easier processing and analysis.
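Here is a minimal Python sketch of parsing and standardization, assuming two hypothetical input formats: JSON lines with ts, level, and msg keys, and plain text of the form "<timestamp> <LEVEL> <message>". Both are mapped onto one common schema, and severity spellings are normalized to a single vocabulary.

```python
import json

# Map source-specific severity spellings to one vocabulary (an assumed convention).
SEVERITY_MAP = {"warn": "WARNING", "warning": "WARNING", "err": "ERROR",
                "error": "ERROR", "info": "INFO", "debug": "DEBUG"}


def normalize_severity(raw):
    """Return the canonical severity name, upper-casing unknown values."""
    return SEVERITY_MAP.get(raw.lower(), raw.upper())


def parse_json_line(line, source):
    """Parse a JSON log line that uses the keys ts, level, msg (assumed)."""
    obj = json.loads(line)
    return {"timestamp": obj["ts"], "severity": normalize_severity(obj["level"]),
            "message": obj["msg"], "source": source}


def parse_text_line(line, source):
    """Parse '<timestamp> <LEVEL> <message>' plain-text lines (assumed format)."""
    timestamp, level, message = line.split(" ", 2)
    return {"timestamp": timestamp, "severity": normalize_severity(level),
            "message": message, "source": source}


records = [
    parse_json_line('{"ts": "2024-05-01T10:00:00Z", "level": "warn", "msg": "disk 85% full"}', "api"),
    parse_text_line("2024-05-01T10:00:02Z ERR connection refused", "db"),
]
for r in records:
    print(r)
```

Once every source is mapped to the same keys, downstream steps can treat all logs uniformly regardless of where they came from.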

3. Centralization

Once the logs are standardized, they are centralized into a single repository. This makes it easier to store, manage, and analyze data from the entire IT environment in one place.
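Centralization can be sketched as merging time-ordered streams from several sources into a single timeline. The CentralRepository class below is a hypothetical in-memory stand-in for a real log store. It assumes each record is a dict with timestamp and source keys, where every timestamp is an ISO 8601 UTC string in the same format so that string order matches time order.

```python
import heapq


def _ts(record):
    return record["timestamp"]


class CentralRepository:
    """In-memory stand-in for a central log store (a hypothetical sketch)."""

    def __init__(self):
        self.records = []

    def ingest(self, *streams):
        """Merge already time-sorted streams into the existing timeline."""
        incoming = heapq.merge(*streams, key=_ts)
        self.records = list(heapq.merge(self.records, incoming, key=_ts))

    def by_source(self, source):
        """Return all stored records that came from one source."""
        return [r for r in self.records if r["source"] == source]


web = [{"timestamp": "2024-05-01T10:00:00Z", "source": "web"},
       {"timestamp": "2024-05-01T10:00:05Z", "source": "web"}]
db = [{"timestamp": "2024-05-01T10:00:03Z", "source": "db"}]

repo = CentralRepository()
repo.ingest(web, db)
print([r["source"] for r in repo.records])
```

heapq.merge streams the inputs lazily instead of re-sorting everything, which is why it suits sources that each already emit logs in time order.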

4. Storage

Storage for centralized log data must keep it accessible while remaining cost-effective. Options range from on-premises databases to cloud-based storage services, and they often include features for managing the log lifecycle, such as archival and deletion policies.
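Lifecycle policies usually come down to age-based rules. This Python sketch assumes a hypothetical policy: keep logs in fast storage for 7 days, archive them until 90 days, then delete them. Real thresholds depend on cost targets and retention requirements.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical lifecycle thresholds; real values depend on cost and retention rules.
HOT_DAYS = 7
ARCHIVE_DAYS = 90


def lifecycle_action(created_at, now):
    """Decide what to do with a log batch based on its age."""
    age = now - created_at
    if age <= timedelta(days=HOT_DAYS):
        return "keep"       # stays in fast, searchable storage
    if age <= timedelta(days=ARCHIVE_DAYS):
        return "archive"    # move to cheaper, slower storage
    return "delete"         # past the retention window


now = datetime(2024, 5, 31, tzinfo=timezone.utc)
for days in (1, 30, 120):
    print(days, lifecycle_action(now - timedelta(days=days), now))
```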

5. Analysis

Once stored, logs support several analytical use cases. Teams can process log data to populate real-time monitoring dashboards and drive alerting. More commonly, teams run queries to locate relevant log data as part of an investigation or root-cause analysis.
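Both analytical uses can be sketched in a few lines of Python, assuming records are dicts with timestamp (ISO 8601), severity, and message keys. The per-minute error threshold stands in for an alerting rule, and the substring search stands in for an investigation query; both are hypothetical simplifications of what log platforms provide.

```python
from collections import Counter


def errors_per_minute(records):
    """Count ERROR records per minute, keyed by the 'YYYY-MM-DDTHH:MM' prefix."""
    return Counter(r["timestamp"][:16] for r in records if r["severity"] == "ERROR")


def minutes_to_alert(records, threshold):
    """Alerting rule: minutes whose error count meets or exceeds `threshold`."""
    counts = errors_per_minute(records)
    return sorted(minute for minute, n in counts.items() if n >= threshold)


def search(records, text):
    """Investigation query: records whose message contains `text`."""
    return [r for r in records if text in r["message"]]


records = [
    {"timestamp": "2024-05-01T10:00:05Z", "severity": "ERROR", "message": "db timeout"},
    {"timestamp": "2024-05-01T10:00:40Z", "severity": "ERROR", "message": "db timeout"},
    {"timestamp": "2024-05-01T10:01:10Z", "severity": "INFO", "message": "retry ok"},
]
print(minutes_to_alert(records, threshold=2))
print(search(records, "retry"))
```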

The insights from log data can help organizations make better decisions and optimize their systems and applications.

In some cases, there is a sixth step: archiving log data to comply with data retention policies.
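Archiving is often as simple as compressing older logs and moving them to cheaper storage. The sketch below uses Python's standard gzip module. The file name and layout are assumptions, and real archives typically also record metadata such as the time range covered.

```python
import gzip
import os
import tempfile


def archive_lines(lines, path):
    """Write log lines to a gzip-compressed archive file."""
    with gzip.open(path, "wt", encoding="utf-8") as f:
        for line in lines:
            f.write(line + "\n")


def read_archive(path):
    """Read lines back from an archive, for example during an audit."""
    with gzip.open(path, "rt", encoding="utf-8") as f:
        return f.read().splitlines()


# Example: archive two lines to a temporary file and read them back.
path = os.path.join(tempfile.mkdtemp(), "app-2024-05-01.log.gz")
archive_lines(["10:00 INFO started", "10:05 ERROR failed"], path)
print(read_archive(path))
```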

Future Log Aggregation Trends

While teams will continue practicing log aggregation, many will also begin processing data upstream, as it’s created. Companies create far more data today than they did even a few years ago, due to the shift to cloud and microservices-based architectures and the rise of digital-first experiences.

As a result, relying solely on centralized log management creates high costs and slow performance. By pushing data processing upstream, teams can extract insights from their logs as soon as they’re created, lowering costs and speeding up insights.
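Here is a minimal sketch of upstream processing, assuming records are dicts with a severity key. Every record is counted at the source, but only higher-severity records are forwarded to central storage. Which levels to forward is a hypothetical policy choice.

```python
from collections import Counter


def process_at_source(records, forward_levels=("ERROR", "WARNING")):
    """Summarize logs where they are produced and forward only what matters.

    Returns (summary, forwarded): summary counts every record by severity,
    while only records in `forward_levels` are shipped to central storage.
    """
    summary = Counter(r["severity"] for r in records)
    forwarded = [r for r in records if r["severity"] in forward_levels]
    return dict(summary), forwarded


records = [
    {"severity": "DEBUG", "message": "cache hit"},
    {"severity": "DEBUG", "message": "cache hit"},
    {"severity": "INFO", "message": "request served"},
    {"severity": "ERROR", "message": "upstream timeout"},
]
summary, forwarded = process_at_source(records)
print(summary)
print(forwarded)
```

The summary preserves an overall picture of activity, such as how many DEBUG entries were produced, without paying to ship and store every entry.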

This approach also complements the growing demand for smarter, automated aggregation solutions that predict system issues before they occur. AI/ML technologies will continue to enhance real-time data processing and anomaly detection. By integrating log aggregation with such technologies, organizations can take a more proactive approach to system maintenance and cybersecurity.
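As a simple, non-ML stand-in for automated anomaly detection, the Python sketch below uses a z-score to flag a count (for example, errors per minute) that deviates sharply from its trailing baseline. The window size and threshold are hypothetical. ML-based systems learn richer baselines, but the underlying idea of comparing new data against expected behavior carries over.

```python
from statistics import mean, pstdev


def rolling_anomalies(counts, window=5, threshold=3.0):
    """Return indices whose count is far from the mean of the previous `window` counts."""
    flagged = []
    for i in range(window, len(counts)):
        baseline = counts[i - window : i]
        mu, sigma = mean(baseline), pstdev(baseline)
        if sigma == 0:
            # A perfectly flat baseline: any change at all is unusual
            if counts[i] != mu:
                flagged.append(i)
        elif abs(counts[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


errors_per_minute = [5, 6, 5, 4, 6, 5, 40, 5]
print(rolling_anomalies(errors_per_minute))
```

Using a trailing window rather than the whole series keeps a single spike from inflating the standard deviation it is being compared against.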

Another emerging trend is a shift toward scalable, cloud-native solutions that can handle data growth from IoT devices, microservices, and cloud apps. These solutions will need flexible aggregation tools that let businesses manage their logs effectively regardless of volume or format.

Lastly, there will be a greater emphasis on privacy and data protection. Log aggregation tools will need to apply advanced data anonymization and encryption techniques, ideally before storage or indexing, to protect sensitive information while still providing valuable insights.
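Here is a minimal Python sketch of redacting data before it is stored or indexed. E-mail and IPv4 addresses are masked, and identifiers can be replaced with a keyed HMAC-SHA256 pseudonym so records stay linkable without exposing the raw value. The regular expressions are deliberately simple and will not catch every format, encryption is not shown, and the hard-coded key is a placeholder only.

```python
import hashlib
import hmac
import re

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")

# Placeholder secret; in practice, load it from a secrets manager and never hard-code it.
PSEUDONYM_KEY = b"example-key-do-not-use"


def pseudonymize(value):
    """Replace an identifier with a keyed hash: linkable across records, not readable."""
    return hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def redact(message):
    """Mask e-mail and IPv4 addresses before the message is stored or indexed."""
    message = EMAIL_RE.sub("<email>", message)
    return IPV4_RE.sub("<ip>", message)


print(redact("login by bob@example.com from 203.0.113.5"))
print(pseudonymize("alice"))
```

A keyed hash is used instead of a plain hash because common identifiers could otherwise be recovered by hashing guesses and comparing the results.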

Conclusion

Log aggregation simplifies the management of IT systems. It centralizes logs from various sources in a single location and a common format for easy monitoring and analysis. This approach is key to proactively managing, securing, and optimizing systems.

The growing volume and complexity of data can overwhelm traditional management methods, creating challenges for security and compliance. Log aggregation helps organizations streamline monitoring and troubleshooting, and it improves decision-making and system optimization.

Log Aggregation FAQs

What platforms provide log aggregation?

Log aggregation tools often include broader observability features for a more complete experience. Splunk, Edge Delta, and Datadog are some of the leading platforms. Their real-time monitoring, visualization, and alerting capabilities cater to a wide range of logging needs.

What is the difference between a log aggregation system and a SIEM?

A SIEM (security information and event management) tool can be used for log aggregation. However, when people refer to log aggregation systems, they're typically referring to log management platforms. These systems centralize and manage logs, focusing on data storage and searchability. A SIEM adds advanced security features to detect and respond to potential threats. The critical difference is that a SIEM offers deeper security analysis and integrated response capabilities beyond basic log management.

What are the disadvantages of log aggregation?

Log aggregation can be expensive and complex, especially when handling large data volumes. It also requires skilled personnel for setup and maintenance. Finally, it can lead to data overload, making insights hard to extract without advanced tools.